Generative AI: Data Challenges and Solutions

What challenges does generative AI face with respect to data? Generative AI models, capable of creating realistic and novel content, are revolutionizing industries. However, their development and deployment rely heavily on data, and this reliance brings a range of challenges.

From data bias and privacy concerns to the need for high-quality and accessible data, generative AI models must navigate a complex landscape of data-related issues. This article delves into these challenges, exploring their implications and potential solutions.

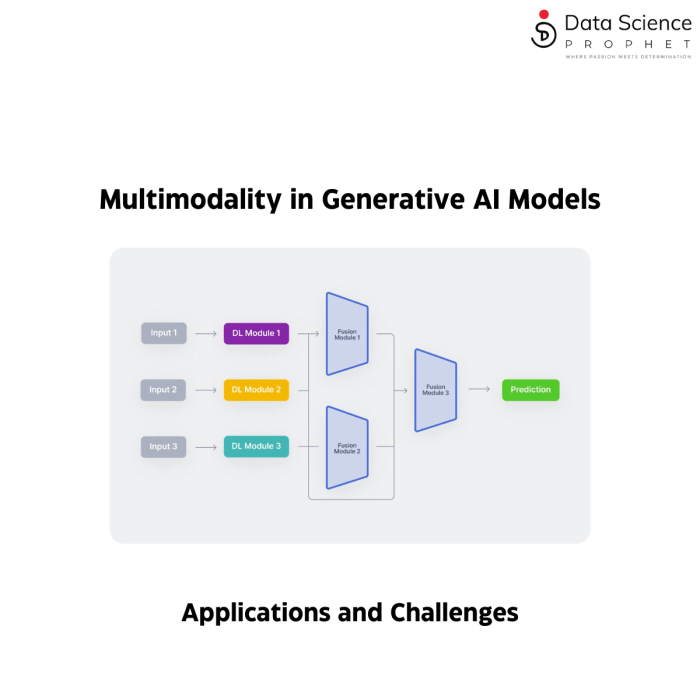

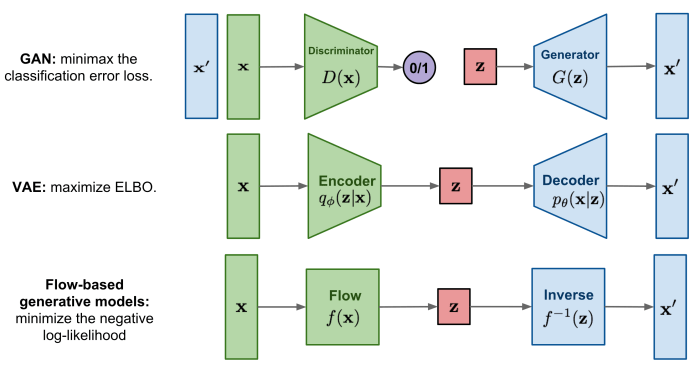

Generative AI, powered by deep learning algorithms, excels at mimicking patterns and creating new data that resembles the data it was trained on. This ability has led to remarkable advancements in areas like image and text generation, music composition, and even drug discovery.

However, the success of these models hinges on the quality and characteristics of the training data.

Data Quality and Integrity

Generative models are sensitive to the quality of the data they are trained on. Noisy, incomplete, or inconsistent data can significantly degrade their performance and reliability. This section examines the challenges posed by such data and techniques for mitigating them.

Data Cleaning and Pre-processing Techniques

Data cleaning and pre-processing are essential steps in preparing data for generative model training. These techniques aim to remove noise, handle missing values, and ensure data consistency.

- Missing Value Imputation: Techniques like mean imputation, median imputation, or predictive models can be used to fill in missing values. This ensures that the model doesn’t encounter gaps in the data during training.

- Outlier Detection and Removal: Outliers are data points that deviate significantly from the general trend. They can distort the training process and lead to inaccurate results. Techniques like box plots, z-scores, or clustering can be used to identify and remove outliers.

- Data Normalization: Scaling data to a common range can improve the performance of some algorithms. Techniques like min-max scaling or standardization can be used to normalize the data.

- Data Transformation: Applying transformations like logarithmic or square root transformations can reduce skew in the data's distribution and make it more suitable for model training.
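
The cleaning steps above can be sketched with Python's standard library. This is a minimal illustration, not a production pipeline: the function names, the sample values, and the z-score threshold of 2.0 are all assumptions chosen for the example. In practice a library such as pandas or scikit-learn would usually handle these steps.

```python
import math
import statistics


def impute_median(values):
    """Replace None entries with the median of the observed values."""
    observed = [v for v in values if v is not None]
    median = statistics.median(observed)
    return [median if v is None else v for v in values]


def remove_outliers_zscore(values, threshold=3.0):
    """Drop points whose absolute z-score exceeds the threshold."""
    mean = statistics.fmean(values)
    std = statistics.pstdev(values)
    if std == 0:
        return list(values)  # all values identical, nothing to remove
    return [v for v in values if abs(v - mean) / std <= threshold]


def min_max_scale(values):
    """Rescale values linearly into the range [0, 1]."""
    lo, hi = min(values), max(values)
    if hi == lo:
        return [0.0 for _ in values]
    return [(v - lo) / (hi - lo) for v in values]


def log_transform(values):
    """Apply log(1 + v) to compress large values (assumes v >= 0)."""
    return [math.log1p(v) for v in values]


# Hypothetical feature column with two missing entries and one extreme value.
raw = [2.0, 3.0, None, 4.0, 3.0, 250.0, 2.0, None, 5.0]

filled = impute_median(raw)
# With only nine points, a single outlier cannot reach a z-score of 3,
# so this example uses a lower threshold of 2.0.
kept = remove_outliers_zscore(filled, threshold=2.0)
scaled = min_max_scale(kept)
logged = log_transform(kept)
```

The order matters here: imputing before outlier removal means the median is computed with the extreme value still present, which is one reason the median (rather than the mean) is used for the fill value.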

Evaluating the Quality of Generated Outputs

Evaluating the quality of generated outputs is crucial to assess the effectiveness of data cleaning and pre-processing techniques. This involves examining the generated data for accuracy, consistency, and adherence to the expected distribution.

- Visual Inspection: Examining generated images or text by eye can provide insights into the quality of the output. This can help identify anomalies, inconsistencies, or biases in the generated data.

- Quantitative Metrics: Metrics like Inception Score (IS) and Fréchet Inception Distance (FID) are commonly used to evaluate the quality of generated images. For text data, metrics like perplexity and BLEU score (which compares generated text against reference texts) can be used.

- Domain Experts’ Evaluation: Involving domain experts in evaluating the generated data can provide valuable insights into its accuracy and relevance. They can assess whether the generated data is consistent with real-world knowledge and expectations.
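
As one concrete instance of the quantitative metrics above, perplexity can be computed from the probabilities a language model assigns to each token of a text. The sketch below assumes those per-token probabilities are already available; the `perplexity` function name and the sample probabilities are illustrative, not taken from any particular library.

```python
import math


def perplexity(token_probs):
    """Perplexity = exp of the mean negative log-probability per token."""
    if not token_probs:
        raise ValueError("token_probs must not be empty")
    mean_nll = -sum(math.log(p) for p in token_probs) / len(token_probs)
    return math.exp(mean_nll)


# Hypothetical probabilities a model assigned to each token of two texts.
confident = [0.5, 0.5, 0.5, 0.5]   # model finds the text fairly predictable
uncertain = [0.1, 0.1, 0.1, 0.1]   # model finds the text surprising

ppl_confident = perplexity(confident)
ppl_uncertain = perplexity(uncertain)
```

Lower perplexity means the model found the text more predictable. A perplexity of about 2 corresponds to the model being, on average, as uncertain as a choice between two equally likely tokens.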

Data Scalability and Efficiency

Training generative models on massive datasets presents unique challenges. The sheer volume of data requires efficient storage and processing techniques to ensure effective model training. This section explores strategies for optimizing data handling and scaling training processes for large datasets.

Data Storage Optimization

Efficiently storing massive datasets is crucial for generative model training. Here are some methods for optimizing data storage:

- Cloud Storage Solutions: Cloud storage platforms like Amazon S3, Google Cloud Storage, and Azure Blob Storage offer scalable and cost-effective solutions for storing large datasets. These platforms provide high availability, data redundancy, and security features that help ensure data integrity and accessibility.

- Data Compression Techniques: Compression tools like gzip or bzip2 can significantly reduce the storage footprint of datasets, especially text and other uncompressed formats. These tools are lossless, so the original data can be restored exactly after decompression.

- Distributed File Systems: Distributed file systems such as Hadoop Distributed File System (HDFS) are designed to handle massive datasets by distributing data across multiple nodes. Processing engines such as Apache Spark can then read that data in parallel, which improves data access speeds.
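
The lossless property of gzip mentioned above can be checked with a short round trip using Python's built-in `gzip` module. The records below are made-up, highly repetitive JSON lines chosen for illustration, so the compression ratio here is far better than a real dataset would typically achieve.

```python
import gzip

# Hypothetical dataset: 1,000 JSON-lines records with repetitive content.
records = "\n".join(
    f'{{"id": {i}, "caption": "a photo of a cat"}}' for i in range(1000)
)
raw = records.encode("utf-8")

compressed = gzip.compress(raw)         # lossless compression
restored = gzip.decompress(compressed)  # exact reconstruction

ratio = len(compressed) / len(raw)
print(f"raw: {len(raw)} bytes, compressed: {len(compressed)} bytes, ratio: {ratio:.2f}")
```

Already-compressed formats such as JPEG images or MP3 audio generally shrink very little under gzip, so the savings depend heavily on the data type.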

Data Processing Optimization

Efficient data processing is vital for training generative models on large datasets. Here are some methods for optimizing data processing:

- Parallel Processing: Frameworks like Apache Spark or TensorFlow can distribute data processing tasks across multiple processors or nodes, significantly reducing processing time. This approach is particularly effective for large datasets.

- Data Pipelines: Implementing data pipelines with tools like Apache Airflow or Luigi streamlines data processing workflows. These tools enable scheduling, monitoring, and automation of data processing tasks, keeping data flow and transformation efficient.

- Data Augmentation: For image data, augmentation techniques such as random cropping, flipping, and color jittering can artificially expand the dataset by generating variations of existing samples. This helps improve model generalization and robustness.
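
To make the augmentation idea concrete, the sketch below applies random cropping and horizontal flipping to a tiny image represented as a nested list of pixel values. The function names and the 4x4 image are assumptions for illustration; real pipelines would typically use an image library's built-in transforms on array data.

```python
import random


def horizontal_flip(image):
    """Mirror each row of the image left to right."""
    return [list(reversed(row)) for row in image]


def random_crop(image, crop_h, crop_w, rng):
    """Cut a crop_h x crop_w window at a random position."""
    height, width = len(image), len(image[0])
    if crop_h > height or crop_w > width:
        raise ValueError("crop size exceeds image size")
    top = rng.randint(0, height - crop_h)   # randint is inclusive on both ends
    left = rng.randint(0, width - crop_w)
    return [row[left:left + crop_w] for row in image[top:top + crop_h]]


def augment(image, rng, crop_h, crop_w):
    """Random crop, then flip horizontally with probability 0.5."""
    out = random_crop(image, crop_h, crop_w, rng)
    if rng.random() < 0.5:
        out = horizontal_flip(out)
    return out


# Hypothetical 4x4 grayscale image with pixel values 0..15.
image = [[r * 4 + c for c in range(4)] for r in range(4)]

rng = random.Random(0)  # seeded so runs are repeatable
variants = [augment(image, rng, crop_h=3, crop_w=3) for _ in range(5)]
```

Each variant reuses pixels from the original image, so augmentation adds variety in position and orientation but no genuinely new information.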

Scaling Generative Model Training

Scaling generative model training involves adapting training strategies to handle large-scale datasets. Here are some strategies:

- Distributed Training: Distributing training across multiple GPUs or nodes allows for faster model training. Tools like Horovod and TensorFlow's tf.distribute API support parallel training on multiple devices.

- Model Parallelization: Model parallelism divides the model into smaller parts that are placed on different devices. This makes it possible to train models with more parameters than fit in a single device's memory, increasing model capacity.

- Gradient Accumulation: Instead of updating model weights after processing each batch of data, gradient accumulation sums gradients over several small batches and then applies a single weight update. This simulates a larger effective batch size without the memory cost of holding that larger batch on the device at once.
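
Gradient accumulation can be illustrated without a deep learning framework. The sketch below fits a one-parameter model y ≈ w · x with mean squared error and compares one full-batch update against one update built from two accumulated micro-batches. The model, data, learning rate, and function names are all assumptions chosen to keep the arithmetic easy to follow.

```python
def grad_mse(w, batch):
    """Gradient of mean squared error for y ≈ w * x over one batch."""
    return sum(2 * (w * x - y) * x for x, y in batch) / len(batch)


def step_full_batch(w, data, lr):
    """One gradient descent step using the whole dataset at once."""
    return w - lr * grad_mse(w, data)


def step_accumulated(w, data, lr, micro_batch_size):
    """One step built from gradients accumulated over micro-batches."""
    accumulated = 0.0
    for start in range(0, len(data), micro_batch_size):
        micro_batch = data[start:start + micro_batch_size]
        # Weight each micro-batch by its share of the data so the sum
        # equals the mean gradient over the full batch.
        accumulated += grad_mse(w, micro_batch) * len(micro_batch) / len(data)
    return w - lr * accumulated  # weights change only once, after accumulation


# Hypothetical data generated by y = 2x.
data = [(1.0, 2.0), (2.0, 4.0), (3.0, 6.0), (4.0, 8.0)]

w_full = step_full_batch(0.0, data, lr=0.01)
w_accumulated = step_accumulated(0.0, data, lr=0.01, micro_batch_size=2)
```

Both updates produce the same weight, but the accumulated version only ever processes two samples at a time. That is the trade: the same effective batch size with lower peak memory, in exchange for more sequential passes per update.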

Concluding Thoughts

As generative AI continues to evolve, addressing data challenges becomes increasingly critical. By tackling bias, ensuring privacy, and optimizing data quality and accessibility, we can unlock the full potential of generative AI while mitigating its risks. This will pave the way for a future where AI-generated content is both innovative and responsible, benefiting individuals and society as a whole.